💡 What's new in txtai 6.0

Sparse, hybrid and subindexes for embeddings, LLM improvements

txtai 6.0 brings a number of major feature enhancements. Highlights include:

- Embeddings
  - Sparse/keyword indexes
  - Hybrid search
  - Subindexes
  - Streamlined methods
- Large Language Models (LLMs)
  - Automatically instantiate the best available underlying model
  - Pass through parameters, enabling immediate support for new features as they are released upstream

These are just the big, high-level changes. There are also many improvements and bug fixes.

This article will cover all the changes with examples.

Standard upgrade disclaimer below

6.0 is one of the largest releases to date, if not the largest! While almost everything is backwards compatible, it's prudent to back up production indexes before upgrading and to test before deploying.

Install dependencies

Install txtai and all dependencies.

```shell
# Install txtai (quoted so shells like zsh don't expand the brackets)
pip install "txtai[graph]" datasets
```

Sparse indexes

While dense vector indexes are by far the best option for semantic search systems, sparse keyword indexes can still add value. There may be cases where finding an exact match is important or we just want a fast index to quickly do an initial scan of the dataset.

Unfortunately, there aren't a ton of great options for a local Python-based keyword index library. Most of the options available don't scale and are highly inefficient, designed only for simple situations. With 6.0, txtai has added a performant sparse index component with speed and accuracy on par with Apache Lucene. A future article will discuss the engineering behind this.
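To make "sparse keyword index" concrete, here is a toy inverted index in plain Python. It maps each term to the ids of the documents that contain it, so a query only touches documents that share a term with it. This is a minimal sketch for illustration. The class and method names are hypothetical, and this is not how txtai's sparse index is engineered.

```python
import re
from collections import defaultdict


def tokenize(text):
    # Lowercase and keep runs of letters and digits
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    """Toy sparse keyword index: term -> set of document ids (hypothetical)."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.documents = {}

    def add(self, uid, text):
        self.documents[uid] = text
        for term in tokenize(text):
            self.postings[term].add(uid)

    def candidates(self, query):
        # Union of posting lists: only documents sharing a query term are returned
        ids = set()
        for term in tokenize(query):
            ids |= self.postings.get(term, set())
        return ids


index = InvertedIndex()
index.add(0, "Linux Terminal I want you to act as a linux terminal")
index.add(1, "English Translator I want you to act as an English translator")
print(sorted(index.candidates("Linux terminal")))  # [0]
```

A real engine also has to rank these candidates, which is where scoring functions such as BM25 come in.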

Let's take a look. We'll use a prompt dataset on the Hugging Face Hub for all examples.

```python
from datasets import load_dataset

import txtai

# Load dataset
ds = load_dataset("fka/awesome-chatgpt-prompts", split="train")

def stream():
    for row in ds:
        yield f"{row['act']} {row['prompt']}"

# Build sparse keyword index
embeddings = txtai.Embeddings(keyword=True, content=True)
embeddings.index(stream())

embeddings.search("Linux terminal", 1)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.5932681465337526}]
```

And there it is, a keyword index!

A couple of things to unpack here. First, those familiar with txtai will notice that the stream method yielded only a text field. With 6.0, ids are generated automatically when they aren't provided.

Next, notice the score. Those familiar with keyword scores (TF-IDF, BM25) will notice that it seems low. That is because a keyword index normalizes scores to between 0 and 1 by default.
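Raw numbers make the point easier to see. Below is a standard Okapi BM25 scorer in plain Python. The k1=1.2 and b=0.75 values are common textbook defaults and are assumed here. After scoring, an illustrative transform squashes the results into [0, 1). That normalization step is an assumption chosen for this example, because the article doesn't describe txtai's own normalization formula.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and keep runs of letters and digits
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Standard Okapi BM25: returns one raw (unbounded) score per document."""
    docs = [Counter(tokenize(doc)) for doc in documents]
    lengths = [sum(doc.values()) for doc in docs]
    avgdl = sum(lengths) / len(docs)
    n = len(docs)

    # Inverse document frequency for each query term present in the collection
    idf = {}
    for term in set(tokenize(query)):
        df = sum(1 for doc in docs if term in doc)
        if df:
            idf[term] = math.log(1 + (n - df + 0.5) / (df + 0.5))

    scores = []
    for doc, length in zip(docs, lengths):
        score = 0.0
        for term, weight in idf.items():
            tf = doc[term]  # Counter returns 0 for absent terms
            score += weight * tf * (k1 + 1) / (tf + k1 * (1 - b + b * length / avgdl))
        scores.append(score)
    return scores


def normalize(score):
    # Illustrative bounded transform into [0, 1); an assumption, not txtai's formula
    return score / (score + 1)


documents = [
    "Linux Terminal I want you to act as a linux terminal",
    "English Translator I want you to act as an English translator",
    "Travel Guide I want you to act as a travel guide",
]
raw = bm25_scores("Linux terminal", documents)
normalized = [normalize(score) for score in raw]
print([round(score, 3) for score in raw])
print([round(score, 3) for score in normalized])
```

A raw BM25 score is an unbounded sum of per-term contributions. Even this tiny example gives a raw score well above 1, so a normalized score in [0, 1] looks "low" to anyone used to raw values.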

More on these items later.

Hybrid Search

The addition of sparse indexes enables hybrid search. Hybrid search combines the results from sparse and dense vector indexes for the best of both worlds.

```python
# Build hybrid index
embeddings = txtai.Embeddings(hybrid=True, content=True)
embeddings.index(stream())

embeddings.search("Linux terminal", 1)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.6078515601252442}]
```

A simple change with a big impact. The new index has both a sparse index and a dense index, and the dense index uses the default sentence-transformers/all-MiniLM-L6-v2 model. Their scores are combined into the single score seen above.

The weights parameter (also known as alpha) controls how the sparse and dense index scores are weighted against each other.

```python
embeddings.search("Linux terminal", 1, weights=1)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.6224349737167358}]
```

```python
embeddings.search("Linux terminal", 1, weights=0)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.5932681465337526}]
```

A weight of 1 only uses the dense index and 0 only uses the sparse index. Notice the score with weight = 0 is the same as the sparse index query earlier.
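The scores above are consistent with a simple linear blend. The default hybrid score (0.6078...) is the mean of the dense-only score (0.6224...) and the sparse-only score (0.5933...). The sketch below reproduces that arithmetic. It assumes the default weight is 0.5 and that the blend is linear, and both assumptions are inferred from these outputs rather than taken from txtai's source. The handling of ids found by only one index is a further assumption.

```python
def hybrid_score(dense, sparse, weights=0.5):
    """Linear blend: weights=1 uses only the dense score, weights=0 only the sparse score."""
    return weights * dense + (1 - weights) * sparse


def hybrid_search(dense_results, sparse_results, weights=0.5):
    """Merge {id: score} dicts from both indexes and rank ids by blended score."""
    # Assumption: an id missing from one index scores 0 for that index
    ids = set(dense_results) | set(sparse_results)
    combined = {
        uid: hybrid_score(dense_results.get(uid, 0.0), sparse_results.get(uid, 0.0), weights)
        for uid in ids
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Scores for id '0' from the weights=1 (dense) and weights=0 (sparse) queries above
dense, sparse = 0.6224349737167358, 0.5932681465337526
print(hybrid_score(dense, sparse))  # ≈ 0.60785, in line with the default hybrid score above
print(hybrid_search({"0": dense}, {"0": sparse}, weights=1))
```

A convex combination like this only makes sense when both scores are on comparable scales, which is one reason for normalizing the keyword scores.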

Subindexes

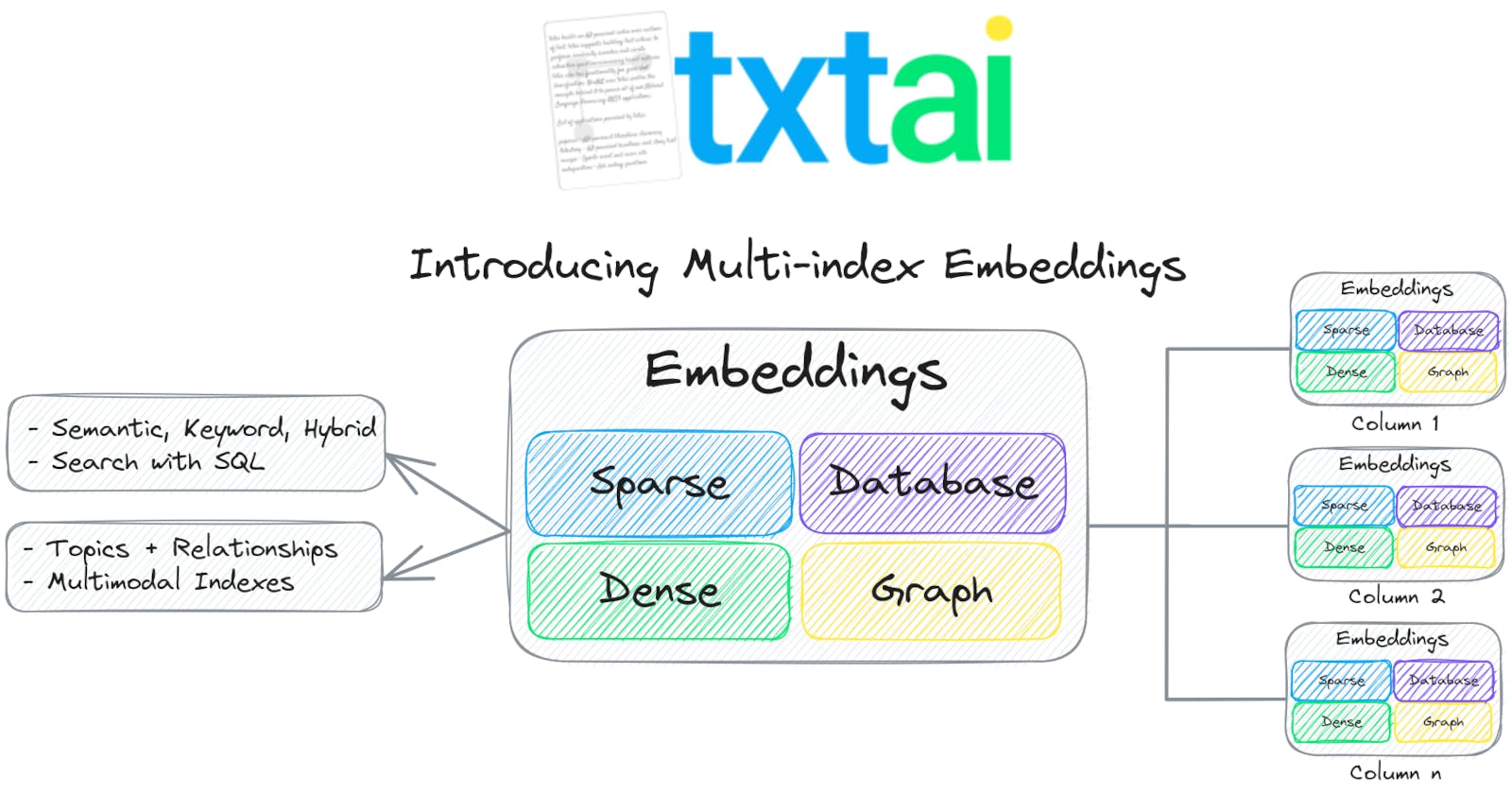

While sparse and hybrid indexes are great new features, the prize of this release is the addition of subindexes. Subindexes will add a host of new ways to build txtai embeddings instances. Let's give a brief intro here.

```python
# Build index with subindexes
embeddings = txtai.Embeddings(
    content=True,
    defaults=False,
    indexes={
        "sparse": {
            "keyword": True
        },
        "dense": {
        }
    }
)
embeddings.index(stream())

# Run search
embeddings.search("select id, text, score from txtai where similar('Linux terminal', 'sparse') and similar('Linux terminal', 'dense')", 1)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.6078515601252442}]
```

```python
embeddings.search("select id, text, score from txtai where similar('Linux terminal', 'dense')", 1)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.6224349737167358}]
```

```python
embeddings.search("select id, text, score from txtai where similar('Linux terminal', 'sparse')", 1)
```

```
[{'id': '0',
  'text': 'Linux Terminal I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.5932681465337526}]
```

Notice that the scores are the same as before. The three searches above run a hybrid search, a dense search and a sparse search, respectively, but this time each one uses subindexes. The top-level Embeddings instance only has an associated database.
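A toy router helps picture how one SQL query addresses several named subindexes. It pulls out each similar('query', 'index') clause, runs that query against the named subindex and averages the scores. The regex, the score function, the "dense" default and the averaging rule are all assumptions made for illustration, and this is not txtai's query engine. Averaging does happen to reproduce the 0.6078... score shown above for the sparse + dense query.

```python
import re

# Matches similar('query') or similar('query', 'index') clauses
SIMILAR = re.compile(r"similar\('([^']*)'(?:,\s*'([^']*)')?\)")


def parse_similar(sql):
    """Return a (query, subindex name or None) pair for each similar() clause."""
    return [(query, index or None) for query, index in SIMILAR.findall(sql)]


def score(sql, subindexes, uid, default="dense"):
    """Hypothetical router: run each clause on its subindex and average the scores for uid."""
    scores = [subindexes[index or default](query).get(uid, 0.0) for query, index in parse_similar(sql)]
    return sum(scores) / len(scores)


# Stand-in subindexes that return the per-index scores for id '0' reported above
subindexes = {
    "sparse": lambda query: {"0": 0.5932681465337526},
    "dense": lambda query: {"0": 0.6224349737167358},
}

sql = "select id, text, score from txtai where similar('Linux terminal', 'sparse') and similar('Linux terminal', 'dense')"
print(parse_similar(sql))  # [('Linux terminal', 'sparse'), ('Linux terminal', 'dense')]
print(score(sql, subindexes, "0"))
```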

Each entry under indexes is a full embeddings index that supports all available options. For example, let's add a graph subindex.

```python
# Build index with graph subindex
embeddings = txtai.Embeddings(
    content=True,
    defaults=False,
    functions=[
        {"name": "graph", "function": "indexes.act.graph.attribute"}
    ],
    expressions=[
        {"name": "topic", "expression": "graph(indexid, 'topic')"},
    ],
    indexes={
        "act": {
            "keyword": True,
            "columns": {
                "text": "act"
            },
            "graph": {
                "topics": {}
            }
        },
        "prompt": {
            "columns": {
                "text": "prompt"
            }
        }
    }
)
embeddings.index(ds)

# Run search
embeddings.search("select id, act, prompt, score, topic from txtai where similar('Linux terminal')", 1)
```

```
[{'id': '0',
  'act': 'Linux Terminal',
  'prompt': 'I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.6382951796072414,
  'topic': 'terminal_linux_sql'}]
```

Notice the new topic field in this query. It comes from the graph index, which runs topic modeling. Also notice that two subindexes were added, each indexing a different column.
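The columns setting is what lets one dataset feed two subindexes. The sketch below models that idea with plain dictionaries. Each subindex reads its own column from the same row, and the full rows are stored only once. The function name and the fallback to a "text" field are assumptions for illustration.

```python
# Hypothetical subindex configuration mirroring the "columns" settings above
INDEXES = {
    "act": {"columns": {"text": "act"}},
    "prompt": {"columns": {"text": "prompt"}},
}


def text_for(row, config):
    """Pick the field a subindex should index from a full row (dict)."""
    # Assumption: fall back to a "text" field when no column mapping is set
    column = config.get("columns", {}).get("text", "text")
    return row[column]


rows = [
    {"act": "Linux Terminal", "prompt": "I want you to act as a linux terminal."},
    {"act": "English Translator", "prompt": "I want you to act as an English translator."},
]

# Each subindex sees its own view of the same rows
views = {
    name: [(uid, text_for(row, config)) for uid, row in enumerate(rows)]
    for name, config in INDEXES.items()
}
print(views["act"])  # [(0, 'Linux Terminal'), (1, 'English Translator')]
```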

Note that graph indexes are different in that they depend on a sparse or dense index being available. That is how the graph is automatically constructed. For good measure, let's add the graph to a dense index.
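Because the graph is built on top of another index, a rough mental model has three steps: take each record's vector, link records that are similar, then group the linked records into topics. The sketch below does this with toy 2-D vectors. It is a simplification, and it is not txtai's implementation. The 0.8 similarity threshold, the use of connected components in place of a proper community detection algorithm, and the frequency-based topic names are all choices made for illustration.

```python
import math
import re
from collections import Counter


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def build_graph(vectors, threshold=0.8):
    # Add an edge between every pair of nodes whose similarity clears the threshold
    edges = {uid: set() for uid in range(len(vectors))}
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            if cosine(vectors[i], vectors[j]) >= threshold:
                edges[i].add(j)
                edges[j].add(i)
    return edges


def components(edges):
    # Connected components stand in for community detection here
    seen, groups = set(), []
    for start in edges:
        if start in seen:
            continue
        stack, group = [start], []
        seen.add(start)
        while stack:
            node = stack.pop()
            group.append(node)
            for neighbor in edges[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        groups.append(sorted(group))
    return groups


def label(group, texts, n=2):
    # Name a topic after its most frequent terms, joined with underscores
    counts = Counter(term for uid in group for term in re.findall(r"[a-z]+", texts[uid].lower()))
    return "_".join(term for term, _ in counts.most_common(n))


texts = ["Linux Terminal", "Linux Shell Terminal", "English Translator"]
vectors = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0]]
groups = components(build_graph(vectors))
print({label(group, texts): group for group in groups})
```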

```python
# Build index with graph subindex
embeddings = txtai.Embeddings(
    content=True,
    defaults=False,
    functions=[
        {"name": "graph", "function": "indexes.act.graph.attribute"}
    ],
    expressions=[
        {"name": "topic", "expression": "graph(indexid, 'topic')"},
    ],
    indexes={
        "act": {
            "path": "intfloat/e5-small-v2",
            "columns": {
                "text": "act"
            },
            "graph": {
                "topics": {}
            }
        },
        "prompt": {
            "path": "sentence-transformers/all-MiniLM-L6-v2",
            "columns": {
                "text": "prompt"
            }
        }
    }
)
embeddings.index(ds)

# Run search
embeddings.search("select id, act, prompt, score, topic from txtai where similar('Linux terminal')", 1)
```

```
[{'id': '0',
  'act': 'Linux Terminal',
  'prompt': 'I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 1.0,
  'topic': 'linux_terminal'}]
```

The result is almost the same as above, except that the topic is different. That's because the graph is now built from a dense vector index instead of a keyword index, and the two group records differently. Notice also that the act and prompt columns are both vector indexes, but each specifies a different vector model. This makes it possible to weight not only sparse against dense scores, but also one vector model against another.

```python
embeddings.search("select id, act, prompt, score from txtai where similar('Linux terminal', 'act') and similar('Linux terminal', 'prompt')", 1)
```

```
[{'id': '0',
  'act': 'Linux Terminal',
  'prompt': 'I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'score': 0.7881423830986023}]
```

As always, everything discussed so far is also supported with txtai application instances.

```python
# Build index with graph subindex
app = txtai.Application("""
writable: True

embeddings:
  content: True
  defaults: False
  functions:
    - name: graph
      function: indexes.act.graph.attribute
  expressions:
    - name: topic
      expression: graph(indexid, 'topic')
  indexes:
    act:
      path: intfloat/e5-small-v2
      columns:
        text: act
      graph:
        topics:
    prompt:
      path: sentence-transformers/all-MiniLM-L6-v2
      columns:
        text: prompt
""")

app.add(ds)
app.index()

app.search("select id, act, prompt, topic, score from txtai where similar('Linux terminal', 'act') and similar('Linux terminal', 'prompt')", 1)
```

```
[{'id': '0',
  'act': 'Linux Terminal',
  'prompt': 'I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when i need to tell you something in english, i will do so by putting text inside curly brackets {like this}. my first command is pwd',
  'topic': 'linux_terminal',
  'score': 0.7881423830986023}]
```

Streamlined methods

Much of this has already been covered, but a number of changes were made to make indexing and searching data easier. All the existing interfaces are still supported. These changes are purely about ease of use.

See the code explanations below.

```python
# Top-level import includes Application and Embeddings
import txtai

app = txtai.Application("""writable: False""")
embeddings = txtai.Embeddings()

# Ids are automatically generated when omitted
embeddings.index(["test"])
print(embeddings.search("test"))

# UUID ids are also supported - use any of the methods in https://docs.python.org/3/library/uuid.html
embeddings = txtai.Embeddings(autoid="uuid5")
embeddings.index(["test"])
print(embeddings.search("test"))
```

```
[(0, 0.9999998807907104)]
[('4be0643f-1d98-573b-97cd-ca98a65347dd', 0.9999998807907104)]
```
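As a rough model of the two id modes shown above, the sketch below generates either sequential integers or values from Python's uuid module. The autoid function name mirrors the parameter, but its logic is an assumption, including the NAMESPACE_DNS namespace used for uuid3/uuid5. It is not guaranteed to reproduce the exact uuid5 value printed above.

```python
import itertools
import uuid


def autoid(method="sequence"):
    """Hypothetical id generator: sequential integers or a uuid module method."""
    if method == "sequence":
        counter = itertools.count()
        return lambda data: next(counter)

    generator = getattr(uuid, method)
    if method in ("uuid3", "uuid5"):
        # Name-based: the same data always maps to the same id (namespace is an assumption)
        return lambda data: str(generator(uuid.NAMESPACE_DNS, str(data)))

    # uuid1 and uuid4 don't depend on the data being indexed
    return lambda data: str(generator())


sequential = autoid()
print([sequential(text) for text in ["a", "b", "c"]])  # [0, 1, 2]

deterministic = autoid("uuid5")
print(deterministic("test") == deterministic("test"))  # True
```

Name-based ids such as uuid5 are deterministic, which can be handy when the same content is reindexed. Random ids such as uuid4 are not.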

Large Language Models (LLMs)

While most of the changes in this release are in the embeddings package, LLMs also get important changes that make them easier to use.

```python
import torch

from txtai import LLM

# Create model, loading weights as bfloat16 (a 16-bit floating point format)
llm = LLM("tiiuae/falcon-rw-1b", torch_dtype=torch.bfloat16)
print(llm("Write a short list of things to do in Paris", maxlength=55))
```

```
- Visit the Eiffel Tower.
- Visit the Louvre.
- Visit the Arc de Triomphe.
- Visit the Notre Dame Cathedral.
- Visit the Sacre Coeur Basilica.
```

The new LLM pipeline automatically detects the type of model and loads it using the best available method.
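One plausible way to detect the model type is to check the is_encoder_decoder flag in a Hugging Face config.json. Encoder-decoder models map to text-to-text generation, and everything else maps to causal text generation. The sketch below applies that rule to plain dictionaries shaped like config.json. The heuristic is an assumption for illustration, because the article doesn't describe how txtai makes this choice.

```python
def detect_task(config):
    """Hypothetical heuristic: choose a generation task from a config.json-style dict."""
    if config.get("is_encoder_decoder"):
        return "text2text-generation"  # e.g. T5-style sequence-to-sequence models
    return "text-generation"  # decoder-only causal language models such as Falcon


print(detect_task({"architectures": ["T5ForConditionalGeneration"], "is_encoder_decoder": True}))  # text2text-generation
print(detect_task({"architectures": ["FalconForCausalLM"]}))  # text-generation
```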

The pipeline framework now passes through keyword arguments to the underlying methods, which adds support for new Hugging Face features automatically as they are released.
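The pass-through idea comes down to forwarding **kwargs. The toy wrapper below shows the pattern. The Generator class, the fake_model stand-in, the 512 default and the mapping from maxlength to max_length are illustrative assumptions, not txtai's code.

```python
class Generator:
    """Toy pipeline that forwards unrecognized keyword arguments to the underlying model."""

    def __init__(self, model):
        self.model = model

    def __call__(self, text, maxlength=512, **kwargs):
        # Only maxlength is interpreted here. Everything else passes straight through,
        # so new upstream options work without changes to this wrapper.
        return self.model(text, max_length=maxlength, **kwargs)


def fake_model(text, max_length, **kwargs):
    # Stand-in for an upstream generate method that echoes what it received
    return {"text": text, "max_length": max_length, **kwargs}


llm = Generator(fake_model)
print(llm("Write a short list of things to do in Paris", maxlength=55, temperature=0.7))
```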

Wrapping up

This article gave a quick overview of txtai 6.0. Updated documentation and more examples will be forthcoming. There is much to cover and much to build on!

See the following links for more information.