This article covers the transcription of audio files to text using models provided by Hugging Face.

Install dependencies

Install txtai and all dependencies. Since this article uses optional pipelines, we need to install the pipeline extras package. The api extras are also installed because we'll demonstrate running this pipeline through the API.

pip install txtai[api,pipeline]

# Get test data

wget -N https://github.com/neuml/txtai/releases/download/v3.5.0/tests.tar.gz

tar -xvzf tests.tar.gz
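If wget or tar is unavailable (for example on Windows), the same download and extraction can be done from Python. Below is a minimal sketch that uses only the standard library. `download` and `extract` are hypothetical helper names. `download` skips files that already exist, which is a simplification of wget's `-N` timestamp check.

```python
import tarfile
import urllib.request
from pathlib import Path

# Release asset used by the wget command above
URL = "https://github.com/neuml/txtai/releases/download/v3.5.0/tests.tar.gz"


def download(url: str, dest: Path) -> Path:
    """Download url to dest, skipping the download if dest already exists."""
    if not dest.exists():
        urllib.request.urlretrieve(url, dest)
    return dest


def extract(archive: Path, outdir: Path) -> list:
    """Extract a .tar.gz archive into outdir and return its member names (like tar -xvzf).

    Only extract archives from sources you trust.
    """
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        tar.extractall(outdir)
    return names
```

For example, `extract(download(URL, Path("tests.tar.gz")), Path("."))` should leave the audio files under `txtai/`, just like the tar command.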

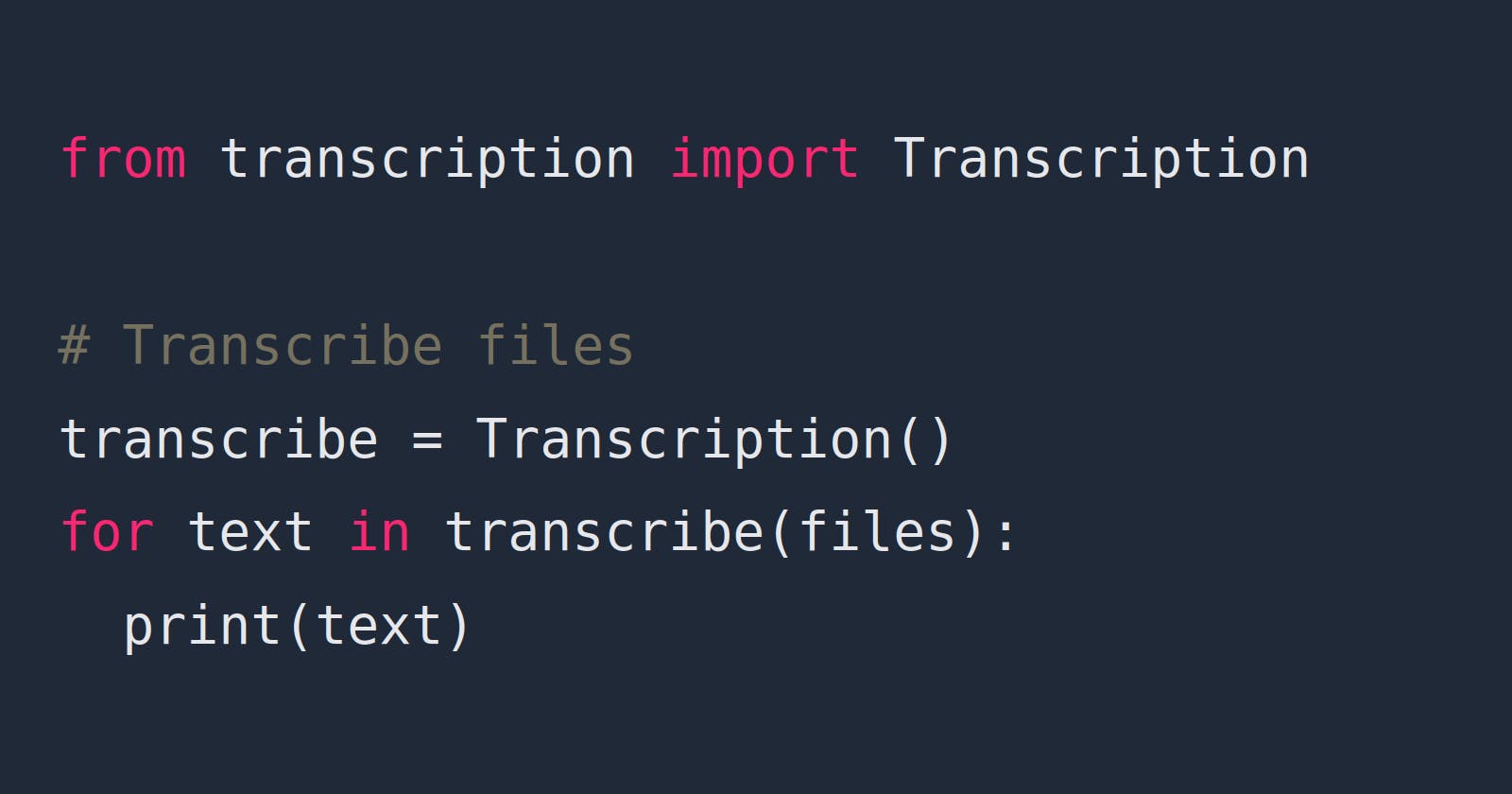

Create a Transcription instance

The Transcription instance is the main entrypoint for transcribing audio to text. The pipeline abstracts transcribing audio into a one-line call!

The pipeline reads audio files into memory, runs the data through a machine learning model and outputs the results as text.
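The first step, reading audio into memory, can be made concrete with a minimal sketch based on Python's standard `wave` module. This is not txtai's implementation. `read_wav` is a hypothetical helper that assumes 16-bit PCM input and averages stereo down to mono. Speech models such as wav2vec2 and Whisper expect 16 kHz audio, so a full pipeline would also resample before running the model.

```python
import struct
import wave


def read_wav(path: str) -> tuple:
    """Read a 16-bit PCM WAV file into mono float samples in [-1.0, 1.0) plus its sample rate."""
    with wave.open(path, "rb") as f:
        if f.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = f.getnchannels()
        rate = f.getframerate()
        raw = f.readframes(f.getnframes())

    # Unpack little-endian signed 16-bit integers
    ints = struct.unpack("<%dh" % (len(raw) // 2), raw)

    # Average interleaved channels down to mono and scale to floats
    samples = [
        sum(ints[i:i + channels]) / channels / 32768.0
        for i in range(0, len(ints), channels)
    ]
    return samples, rate
```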

from txtai.pipeline import Transcription

# Create transcription model

transcribe = Transcription()

Transcribe audio to text

The example below transcribes a list of audio files to text. Let's run it and look at each result.

from IPython.display import Audio, display

files = ["Beijing_mobilises.wav", "Canadas_last_fully.wav", "Maine_man_wins_1_mil.wav", "Make_huge_profits.wav", "The_National_Park.wav", "US_tops_5_million.wav"]
files = ["txtai/%s" % x for x in files]

# Transcribe all files, then play each clip next to its transcription
for x, text in enumerate(transcribe(files)):
    display(Audio(files[x]))
    print(text)
    print()

Baging mobilizes invasion kraft along coast as tie one tensions escalates

Canodas last fully intact ice shelf has suddenly collapsed forming a manhattan sized iceberge

Main man wins from lottery ticket

Make huge profits without working make up to one hundred thousand dollars a day

National park service warns against sacrificing slower friends in a bare attack

Ues virus cases top a million

Overall, the results are solid. Each result sounds phonetically like the audio, even where words are misspelled (for example "Baging" for "Beijing" and "bare" for "bear").

OpenAI Whisper

In September 2022, OpenAI released Whisper, a model that brings a dramatic improvement in transcription quality. Whisper support was added to Hugging Face Transformers in v4.23.0. Let's give it a try.

transcribe = Transcription("openai/whisper-base")

for text in transcribe(files):
    print(text)

Beijing mobilizes invasion craft along coast as Taiwan tensions escalate.

Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan sized iceberg.

Maine Man wins from lottery ticket.

make huge profits without working. Make up to $100,000 a day.

National Park Service warns against sacrificing slower friends in a bear attack.

U.S. virus cases top of million.

The results are transcribed with near-perfect accuracy, including capitalization and punctuation. Amazing!
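One way to quantify the difference between the two models is word error rate (WER): the word-level edit distance between a reference and a hypothesis, divided by the number of reference words. Below is a minimal sketch. Whisper's output is not ground truth, so treating it as the reference is an assumption made here only for illustration. `normalize` and `wer` are hypothetical helpers that ignore case and punctuation.

```python
import re


def normalize(text: str) -> list:
    """Lowercase and keep only letters, digits and apostrophes so case and punctuation are not counted as errors."""
    return re.findall(r"[a-z0-9']+", text.lower())


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level Levenshtein distance divided by the reference length."""
    ref, hyp = normalize(reference), normalize(hypothesis)

    # prev[j] is the edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, rword in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hword in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,                     # deletion
                curr[j - 1] + 1,                 # insertion
                prev[j - 1] + (rword != hword),  # substitution or match
            )
        prev = curr
    return prev[-1] / max(len(ref), 1)


whisper = "Beijing mobilizes invasion craft along coast as Taiwan tensions escalate."
default = "Baging mobilizes invasion kraft along coast as tie one tensions escalates"
print(wer(whisper, default))  # 0.5
```

For the first clip, this gives 5 edits over 10 reference words: four substitutions and one insertion.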

This pipeline can also be run through a txtai application or an API instance. Let's try a full indexing workflow with a txtai application, using the following configuration saved as workflow.yml.

writable: true

embeddings:
  path: sentence-transformers/nli-mpnet-base-v2
  content: true

transcription:
  path: openai/whisper-base

workflow:
  index:
    tasks:
      - transcription
      - index
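Conceptually, the index workflow passes each batch of input files through the transcription task and then through the index task. The toy sketch below illustrates that chaining. It is not txtai's Workflow class: `run_workflow`, `transcription` and `index` are hypothetical stand-ins. The transcription step looks up the Whisper outputs shown above instead of running a model, and the index step assigns sequential ids as rows arrive.

```python
from typing import Callable, Iterable, Iterator, List

# A task takes a batch of elements and returns a batch of results
Task = Callable[[List], List]


def run_workflow(tasks: List[Task], elements: Iterable, batch: int = 2) -> Iterator:
    """Toy workflow: send elements through each task in order, one batch at a time."""
    buffer = list(elements)
    for start in range(0, len(buffer), batch):
        data = buffer[start:start + batch]
        for task in tasks:
            data = task(data)
        yield from data


# Hypothetical canned transcriptions (the Whisper outputs above), standing in for a model
CANNED = {
    "txtai/Beijing_mobilises.wav": "Beijing mobilizes invasion craft along coast as Taiwan tensions escalate.",
    "txtai/Canadas_last_fully.wav": "Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan sized iceberg.",
    "txtai/Maine_man_wins_1_mil.wav": "Maine Man wins from lottery ticket.",
}
rows: List[dict] = []


def transcription(files: List[str]) -> List[str]:
    # A real task would run a speech model; this looks up canned text instead
    return [CANNED.get(f, "") for f in files]


def index(texts: List[str]) -> List[str]:
    # Store each text with a sequential id, then pass the texts through unchanged
    for text in texts:
        rows.append({"id": str(len(rows)), "text": text})
    return texts
```

Running `list(run_workflow([transcription, index], files))` on the first three files fills `rows` in input order.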

from txtai.app import Application

app = Application("workflow.yml")

list(app.workflow("index", files))

app.search("feel good story", 1)

[{'id': '2',
  'text': 'Maine Man wins from lottery ticket.',
  'score': 0.1285860687494278}]

This workflow transcribed the input files, loaded the transcriptions into an embeddings index and finally ran a search. The last thing we'll do is run the workflow through an API instance.

# Start the API with the workflow configuration
CONFIG=workflow.yml uvicorn "txtai.api:app" &> api.log &
sleep 30

# Run indexing workflow
curl -s -o /dev/null \
  -X POST "http://localhost:8000/workflow" \
  -H "Content-Type: application/json" \
  -d '{"name":"index", "elements":["txtai/Beijing_mobilises.wav", "txtai/Canadas_last_fully.wav", "txtai/Maine_man_wins_1_mil.wav", "txtai/Make_huge_profits.wav", "txtai/The_National_Park.wav", "txtai/US_tops_5_million.wav"]}'

# Test API search
curl "http://localhost:8000/search?query=feel+good+story&limit=1"

[{"id":"2","text":"Maine Man wins from lottery ticket.","score":0.1285860687494278}]

Once again, the API returns the same result as the Python application.
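The same API calls can also be made from Python without curl. Below is a minimal sketch that uses only the standard library. `wait_for_server`, `workflow_request`, `search_url` and `search` are hypothetical helpers. Polling until the server returns any HTTP response is offered as an alternative to the fixed `sleep 30`, on the assumption that the server answers once it is ready.

```python
import json
import time
import urllib.error
import urllib.parse
import urllib.request

BASE = "http://localhost:8000"


def wait_for_server(base: str, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll base until it returns any HTTP response, giving up after timeout seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(base, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # Any HTTP status (e.g. 404 on "/") means the server is accepting requests
            return True
        except OSError:
            # Connection refused or timed out (URLError is an OSError subclass): retry
            time.sleep(interval)
    return False


def workflow_request(base: str, name: str, elements: list) -> urllib.request.Request:
    """Build the same POST /workflow request as the curl command."""
    body = json.dumps({"name": name, "elements": elements}).encode("utf-8")
    return urllib.request.Request(
        base + "/workflow",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def search_url(base: str, query: str, limit: int) -> str:
    """Build the same GET /search URL as the curl command."""
    return base + "/search?" + urllib.parse.urlencode({"query": query, "limit": limit})


def search(base: str, query: str, limit: int = 1) -> list:
    """Run a search and decode the JSON response."""
    with urllib.request.urlopen(search_url(base, query, limit)) as response:
        return json.load(response)
```

After starting the server, you can call `wait_for_server(BASE)`, then `urllib.request.urlopen(workflow_request(BASE, "index", files)).read()`, then `search(BASE, "feel good story")`. Together these mirror the two curl commands.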

Wrapping up

There is a lot of development in the audio transcription space. With only a couple of lines of code, high-quality transcription models are now readily available!