Query translation

Domain-specific natural language queries with query translation

txtai supports two main types of queries: natural language statements and SQL statements. Natural language queries handles a search engine like query. SQL statements enable more complex filtering, sorting and column selection. Query translation bridges the gap between the two and enables filtering for natural language queries.

For example, the query:

Tell me a feel good story since yesterday

becomes

select * from txtai where similar("Tell me a feel good story") and

entry >= date('now', '-1 day')

Install dependencies

Install txtai and all dependencies.

pip install txtai[pipeline]

Create index

Let's first recap how to create an index. We'll use the classic txtai example.

from txtai.embeddings import Embeddings

data = ["US tops 5 million confirmed virus cases",

"Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan-sized iceberg",

"Beijing mobilises invasion craft along coast as Taiwan tensions escalate",

"The National Park Service warns against sacrificing slower friends in a bear attack",

"Maine man wins $1M from $25 lottery ticket",

"Make huge profits without work, earn up to $100,000 a day"]

# Create embeddings index with content enabled. The default behavior is to only store indexed vectors.

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2", "content": True})

# Create an index for the list of text

embeddings.index([(uid, text, None) for uid, text in enumerate(data)])

# Run a search

embeddings.search("feel good story", 1)

[{'id': '4',

'score': 0.08329011499881744,

'text': 'Maine man wins $1M from $25 lottery ticket'}]

Query translation models

Next we'll explore how query translation models work with examples.

from txtai.pipeline import Sequences

sequences = Sequences("NeuML/t5-small-txtsql")

queries = [

"feel good story",

"feel good story since yesterday",

"feel good story with lottery in text",

"how many feel good story",

"feel good story translated to fr",

"feel good story summarized"

]

# Prefix to pass to T5 model

prefix = "translate English to SQL: "

for query in queries:

print(f"Input: {query}")

print(f"SQL: {sequences(query, prefix)}")

print()

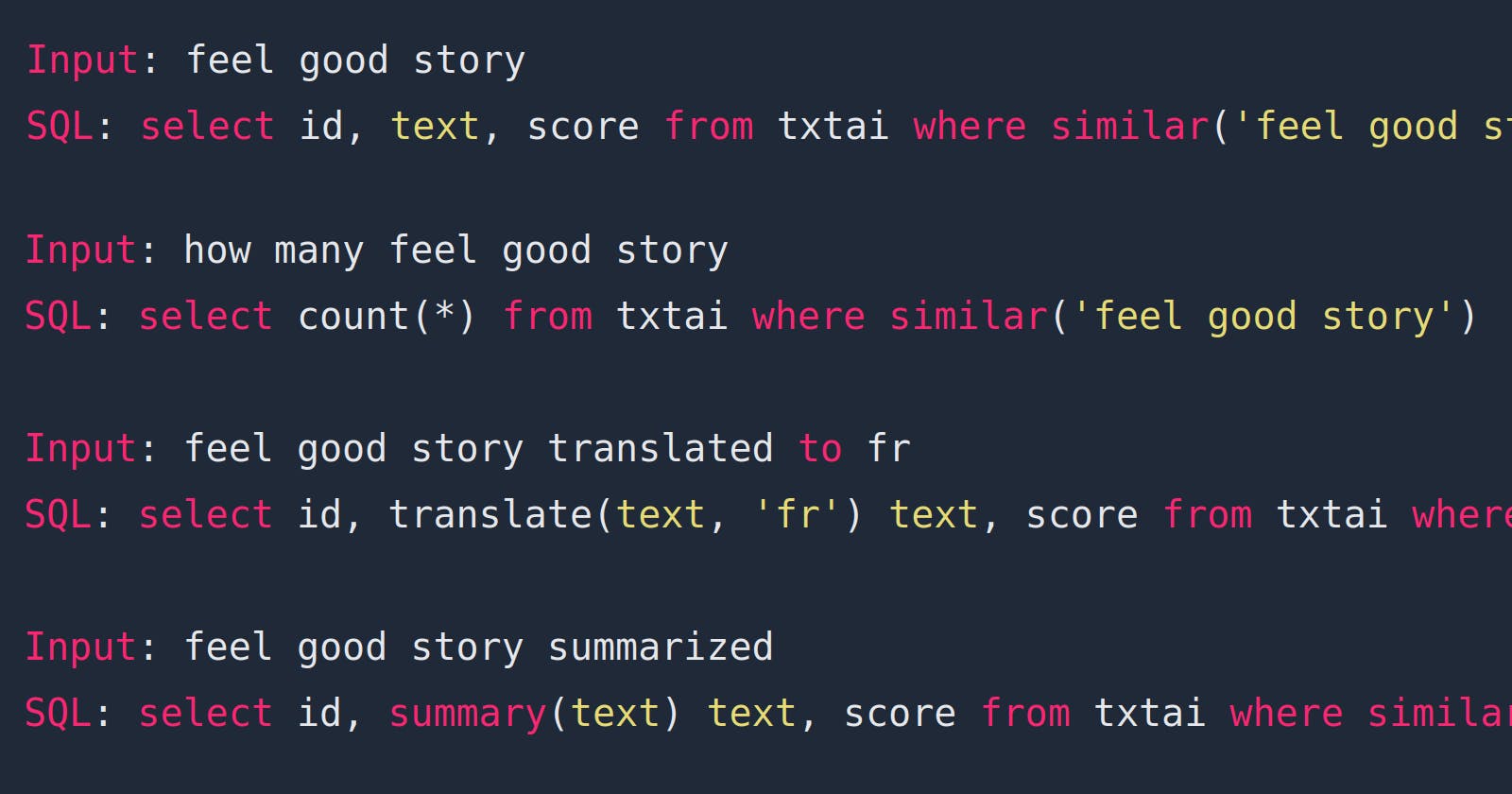

Input: feel good story

SQL: select id, text, score from txtai where similar('feel good story')

Input: feel good story since yesterday

SQL: select id, text, score from txtai where similar('feel good story') and entry >= date('now', '-1 day')

Input: feel good story with lottery in text

SQL: select id, text, score from txtai where similar('feel good story') and text like '% lottery%'

Input: how many feel good story

SQL: select count(*) from txtai where similar('feel good story')

Input: feel good story translated to fr

SQL: select id, translate(text, 'fr') text, score from txtai where similar('feel good story')

Input: feel good story summarized

SQL: select id, summary(text) text, score from txtai where similar('feel good story')

Looking at the query translations above gives an idea on how this model works.

t5-small-txtsql is the default model. Custom domain query syntax languages can be created using this same methodology, including for other languages. Natural language can be translated to functions, query clauses, column selection and more!

Natural language filtering

Now it's time for this in action! Let's first initialize the embeddings index with the appropriate settings.

from txtai.pipeline import Translation

def translate(text, lang):

return translation(text, lang)

translation = Translation()

# Create embeddings index with content enabled. The default behavior is to only store indexed vectors.

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2",

"content": True,

"query": {"path": "NeuML/t5-small-txtsql"},

"functions": [translate]})

# Create an index for the list of text

embeddings.index([(uid, text, None) for uid, text in enumerate(data)])

query = "select id, score, translate(text, 'de') 'text' from txtai where similar('feel good story')"

# Run a search using a custom SQL function

embeddings.search(query)[0]

{'id': '4',

'score': 0.08329011499881744,

'text': 'Maine Mann gewinnt $1M von $25 Lotterie-Ticket'}

Note how the query model was provided as a embeddings index configuration parameter. Custom SQL functions were also added in. Let's now see if the same SQL statement can be run with a natural language query.

embeddings.search("feel good story translated to de")[0]

{'id': '4',

'score': 0.08329011499881744,

'text': 'Maine Mann gewinnt $1M von $25 Lotterie-Ticket'}

Same result. Let's try a few more.

embeddings.search("feel good story since yesterday")[0]

{'id': '4',

'score': 0.08329011499881744,

'text': 'Maine man wins $1M from $25 lottery ticket'}

embeddings.search("feel good story with lottery in text")[0]

{'id': '4',

'score': 0.08329011499881744,

'text': 'Maine man wins $1M from $25 lottery ticket'}

For good measure, a couple queries with filters that return no results.

embeddings.search("feel good story with missing in text")

[]

embeddings.search("feel good story with field equal 14")

[]

Query translation with applications

Of course this is all available with YAML-configured applications.

config = """

translation:

writable: true

embeddings:

path: sentence-transformers/nli-mpnet-base-v2

content: true

query:

path: NeuML/t5-small-txtsql

functions:

- {name: translate, argcount: 2, function: translation}

"""

from txtai.app import Application

# Build application and index data

app = Application(config)

app.add([{"id": x, "text": row} for x, row in enumerate(data)])

app.index()

# Run search query

app.search("feel good story translated to de")[0]

{'id': '4',

'score': 0.08329011499881744,

'text': 'Maine Mann gewinnt $1M von $25 Lotterie-Ticket'}

Wrapping up

This article introduced natural language filtering with query translation models. This powerful feature adds filtering and pipelines to natural language statements. Custom domain-specific query languages can be created to enable rich queries natively in txtai.