Entity extraction is the process of identifying names, locations, organizations and other entity-like tokens in unstructured text. Entity extraction can organize data into topics and/or feed downstream machine learning pipelines.

This notebook will show how to use the entity extraction pipeline in txtai with workflows.

Install dependencies

Install txtai and all dependencies.

pip install txtai

Extract entities

Let's get right to it! The following example creates an entity pipeline and extracts entities from text.

from txtai.pipeline import Entity

data = ["US tops 5 million confirmed virus cases",

"Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan-sized iceberg",

"Beijing mobilises invasion craft along coast as Taiwan tensions escalate",

"The National Park Service warns against sacrificing slower friends in a bear attack",

"Maine man wins $1M from $25 lottery ticket",

"Make huge profits without work, earn up to $100,000 a day"]

entity = Entity()

for x, e in enumerate(entity(data)):

print(data[x])

print(f" {e}", "\n")

US tops 5 million confirmed virus cases

[('US', 'LOC', 0.999273955821991)]

Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan-sized iceberg

[('Canada', 'LOC', 0.999609649181366), ('Manhattan', 'MISC', 0.651396632194519)]

Beijing mobilises invasion craft along coast as Taiwan tensions escalate

[('Beijing', 'LOC', 0.9996659755706787), ('Taiwan', 'LOC', 0.9996755123138428)]

The National Park Service warns against sacrificing slower friends in a bear attack

[('National Park Service', 'ORG', 0.9993489384651184)]

Maine man wins $1M from $25 lottery ticket

[('Maine', 'LOC', 0.9987521171569824)]

Make huge profits without work, earn up to $100,000 a day

[]

The section above is running an entity extraction pipeline for each row in data. The outputs are the token(s) identified as part of an entity, the type of entity and score or confidence in the prediction.

Feed entities to a workflow

The next section demonstrates how the entity extraction pipeline can be used as part of a workflow. This workflow uses the output entities and builds an embeddings index for each row. This effectively computes entity embeddings to compare the row similarity with a focus on mentioned entities.

from txtai.embeddings import Embeddings, Documents

from txtai.workflow import Workflow, Task

# Create workflow with an entity pipeline output into a documents collection

documents = Documents()

workflow = Workflow([Task(lambda x: entity(x, flatten=True, join=True)), Task(documents.add, unpack=False)])

# Run workflow

for _ in workflow([(x, row, None) for x, row in enumerate(data)]):

pass

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2"})

embeddings.index(documents)

for query in ["North America", "Asia Pacific"]:

index = embeddings.search(query, 1)[0][0]

print(query, "\t", data[index])

North America Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan-sized iceberg

Asia Pacific Beijing mobilises invasion craft along coast as Taiwan tensions escalate

Run workflow YAML

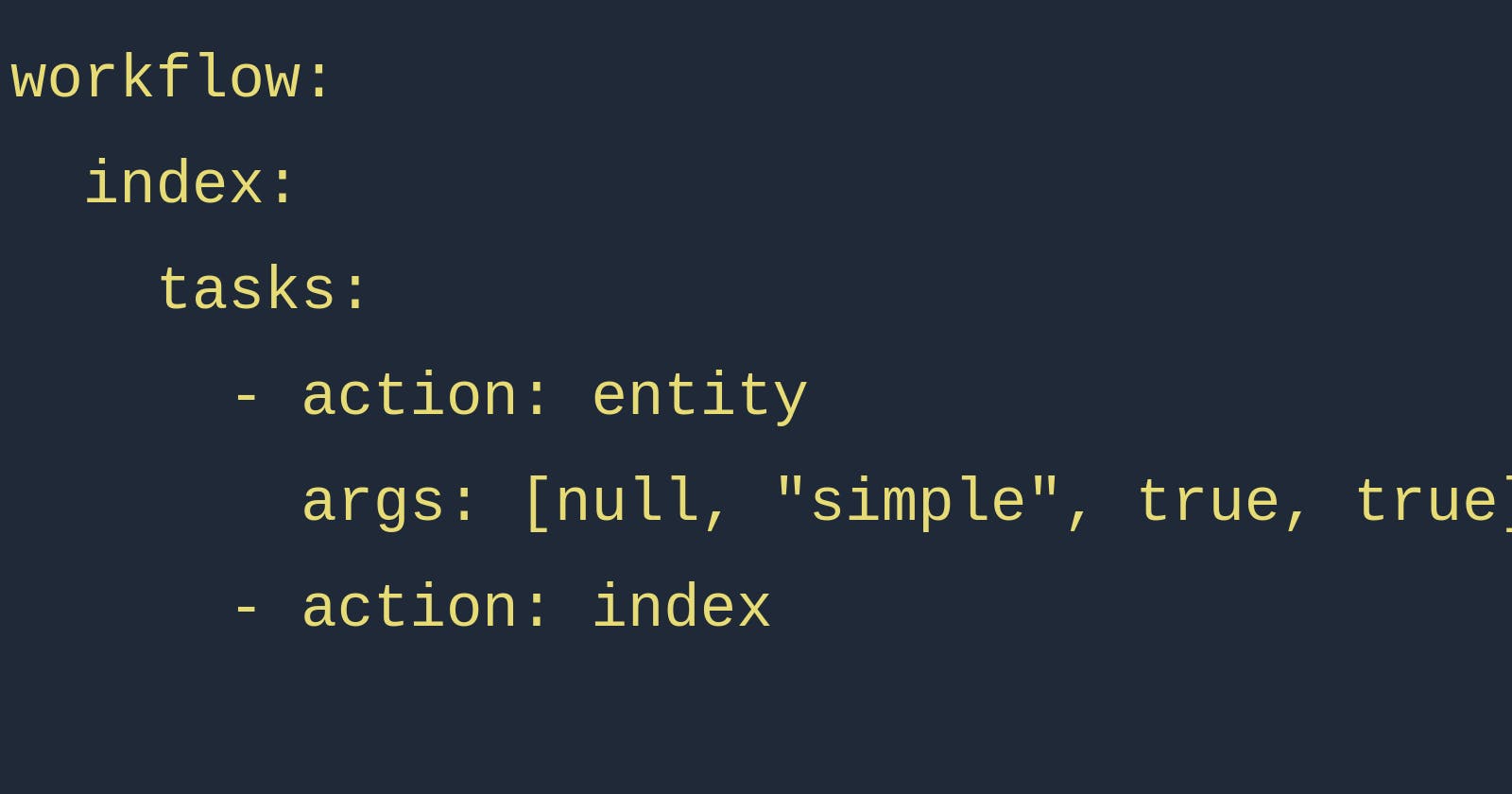

Below is the same example using workflow YAML.

workflow = """

writable: true

embeddings:

path: sentence-transformers/nli-mpnet-base-v2

entity:

workflow:

index:

tasks:

- action: entity

args: [null, "simple", true, true]

- action: index

"""

from txtai.app import Application

# Create and run workflow

app = Application(workflow)

for _ in app.workflow("index", [(x, row, None) for x, row in enumerate(data)]):

pass

# Run queries

for query in ["North America", "Asia Pacific"]:

index = app.search(query)[0]["id"]

print(query, "\t", data[index])

North America Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan-sized iceberg

Asia Pacific Beijing mobilises invasion craft along coast as Taiwan tensions escalate

Wrapping up

This notebook introduced entity extraction pipelines with txtai. This pipeline supports a number of different configurations to help feed downstream systems and/or directly use the entities.

As with other pipelines, the entity extraction pipeline can be used standalone in Python, as an API service or as part of a workflow!