Embeddings SQL custom functions

Add user-defined functions to Embeddings SQL

txtai 4.0 added support for SQL-based embeddings queries. This feature combines natural language queries for similarity with concrete filtering rules. txtai now has support for user-defined SQL functions, making this feature even more powerful.

Install dependencies

Install txtai and all dependencies.

pip install txtai[pipeline]

Create index

Let's first recap how to create an index. We'll use the classic txtai example.

from txtai.embeddings import Embeddings

data = ["US tops 5 million confirmed virus cases",

"Canada's last fully intact ice shelf has suddenly collapsed, forming a Manhattan-sized iceberg",

"Beijing mobilises invasion craft along coast as Taiwan tensions escalate",

"The National Park Service warns against sacrificing slower friends in a bear attack",

"Maine man wins $1M from $25 lottery ticket",

"Make huge profits without work, earn up to $100,000 a day"]

# Create embeddings index with content enabled. The default behavior is to only store indexed vectors.

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2", "content": True})

# Create an index for the list of text

embeddings.index([(uid, text, None) for uid, text in enumerate(data)])

# Run a search

embeddings.search("feel good story", 1)

[{'id': '4',

'score': 0.08329004049301147,

'text': 'Maine man wins $1M from $25 lottery ticket'}]

Custom SQL functions

Next, we'll recreate the index adding user-defined SQL functions. These functions are simply Python callable objects or functions that take an input and return values. Pipelines, workflows, custom tasks and any other callable object is supported.

def clength(text):

return len(text) if text else 0

# Create embeddings index with content enabled. The default behavior is to only store indexed vectors.

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2", "content": True, "functions": [clength]})

# Create an index for the list of text

embeddings.index([(uid, text, None) for uid, text in enumerate(data)])

# Run a search using a custom SQL function

embeddings.search("select clength(text) clength, length(text) length, text from txtai where similar('feel good story')", 1)

[{'clength': 42,

'length': 42,

'text': 'Maine man wins $1M from $25 lottery ticket'}]

The function itself is simple, it's just alternate length function. But this example is just warming us up to what is possible and what is more exciting.

Pipelines in SQL

As mentioned above, any callable can be registered as a custom SQL function. Let's add a translate SQL function.

from txtai.pipeline import Translation

# Translation pipeline

translate = Translation()

# Create embeddings index with content enabled. The default behavior is to only store indexed vectors.

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2", "content": True, "functions": [translate]})

# Create an index for the list of text

embeddings.index([(uid, text, None) for uid, text in enumerate(data)])

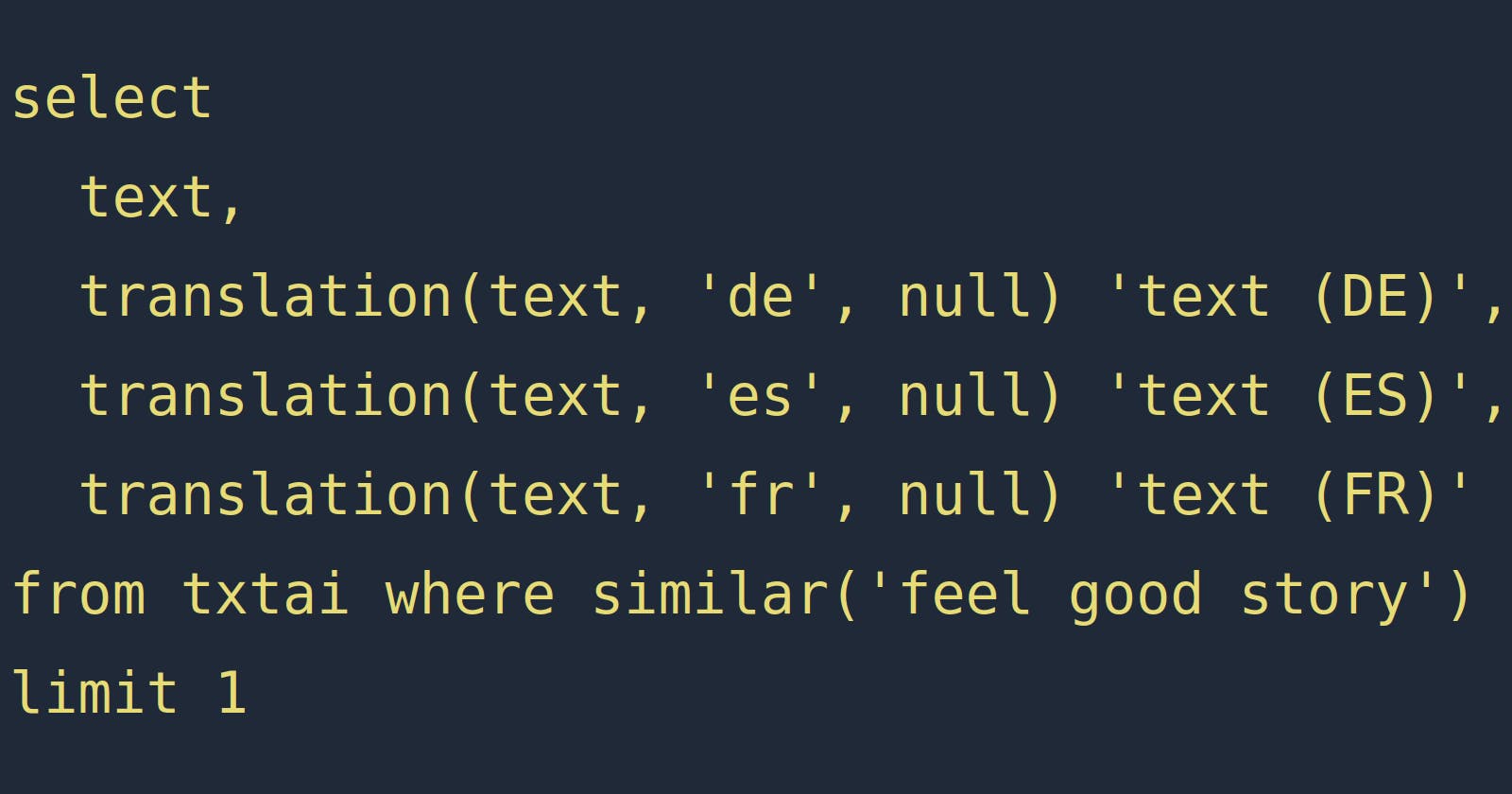

query = """

select

text,

translation(text, 'de', null) 'text (DE)',

translation(text, 'es', null) 'text (ES)',

translation(text, 'fr', null) 'text (FR)'

from txtai where similar('feel good story')

limit 1

"""

# Run a search using a custom SQL function

embeddings.search(query)

[{'text': 'Maine man wins $1M from $25 lottery ticket',

'text (DE)': 'Maine Mann gewinnt $1M von $25 Lotterie-Ticket',

'text (ES)': 'Maine hombre gana $1M de billete de lotería de $25',

'text (FR)': 'Maine homme gagne $1M à partir de $25 billet de loterie'}]

And just like that we have translations through SQL! This is pretty 🔥🔥🔥

We can do more to make this easier though. Let's define a helper function to not require as many parameters. The default logic will require all function parameters each call, including parameters with default values.

def translation(text, lang):

return translate(text, lang)

# Create embeddings index with content enabled. The default behavior is to only store indexed vectors.

embeddings = Embeddings({"path": "sentence-transformers/nli-mpnet-base-v2", "content": True, "functions": [translation]})

# Create an index for the list of text

embeddings.index([(uid, text, None) for uid, text in enumerate(data)])

query = """

select

text,

translation(text, 'de') 'text (DE)',

translation(text, 'es') 'text (ES)',

translation(text, 'fr') 'text (FR)'

from txtai where similar('feel good story')

limit 1

"""

# Run a search using a custom SQL function

embeddings.search(query)

[{'text': 'Maine man wins $1M from $25 lottery ticket',

'text (DE)': 'Maine Mann gewinnt $1M von $25 Lotterie-Ticket',

'text (ES)': 'Maine hombre gana $1M de billete de lotería de $25',

'text (FR)': 'Maine homme gagne $1M à partir de $25 billet de loterie'}]

Custom SQL functions with applications

Of course this is all available with YAML-configured applications.

config = """

translation:

writable: true

embeddings:

path: sentence-transformers/nli-mpnet-base-v2

content: true

functions:

- {name: translation, argcount: 2, function: translation}

"""

from txtai.app import Application

# Build application and index data

app = Application(config)

app.add([{"id": x, "text": row} for x, row in enumerate(data)])

app.index()

# Run search with custom SQL

app.search(query)

[{'text': 'Maine man wins $1M from $25 lottery ticket',

'text (DE)': 'Maine Mann gewinnt $1M von $25 Lotterie-Ticket',

'text (ES)': 'Maine hombre gana $1M de billete de lotería de $25',

'text (FR)': 'Maine homme gagne $1M à partir de $25 billet de loterie'}]

Wrapping up

This article introduced running user-defined custom SQL functions through embeddings SQL. This powerful feature can be used with any callable function including pipelines, tasks and workflows in tandem with similarity and rules filters.