Customize your own embeddings database

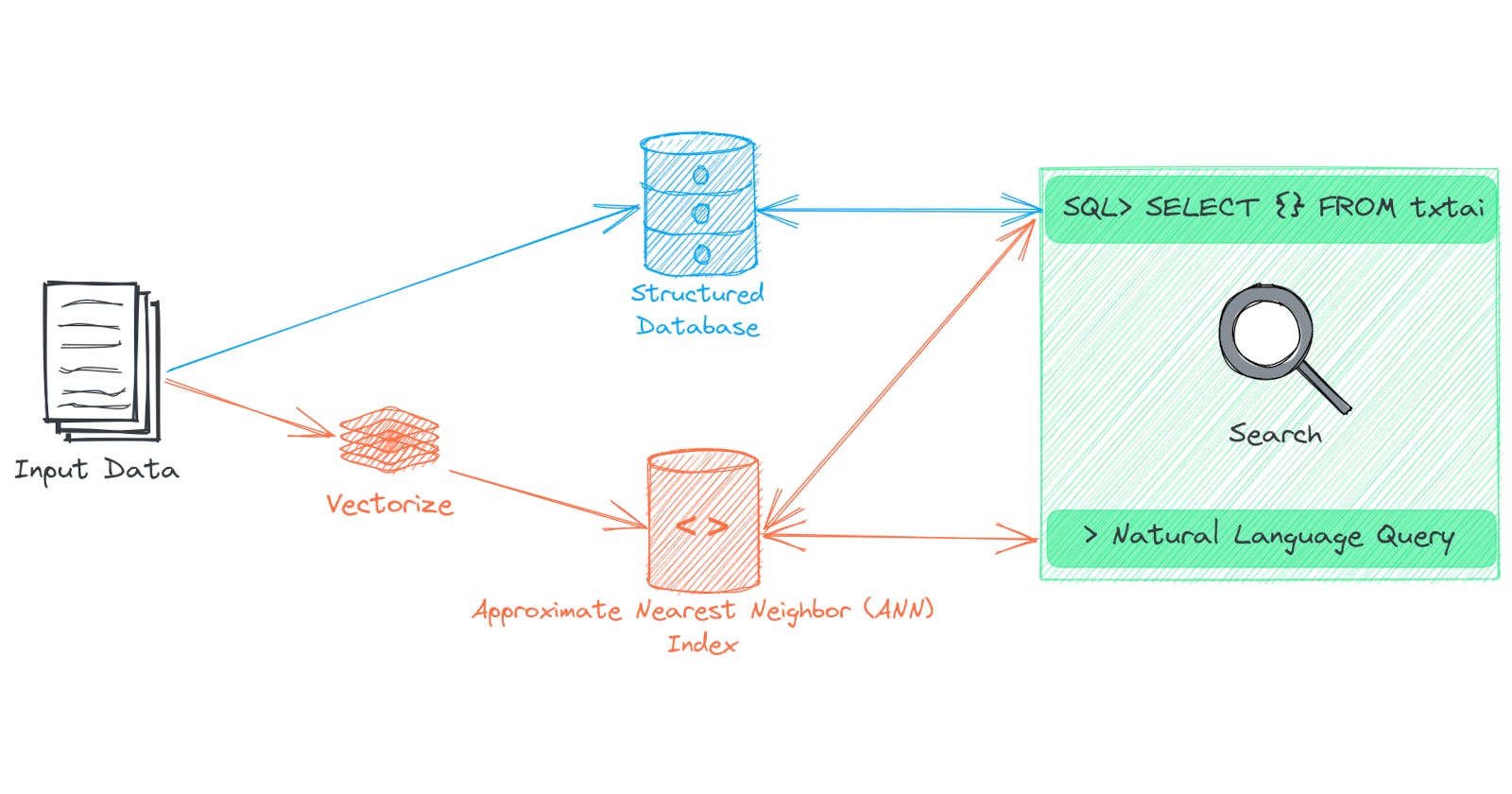

Ways to combine vector indexes with relational databases

txtai supports a number of different database and vector index backends, including external databases. With modern hardware, a single-node index can go a long way, easily scaling into the hundreds of millions and even billions of records.

txtai provides maximum flexibility in creating your own embeddings database. Sensible defaults are used out of the box, so this configuration is only necessary if you want it. This article explores the options available when you do want to customize your embeddings database.

More on embeddings configuration settings can be found here.

Install dependencies

Install txtai and all dependencies.

# Install txtai

pip install txtai[database,similarity] datasets

Load dataset

This example uses the ag_news dataset, a collection of news article headlines and short descriptions. We'll use a subset consisting of the first 25,000 records.

import timeit

from datasets import load_dataset

def timer(embeddings, query="red sox"):
    # Run the same query 250 times and report the average latency
    elapsed = timeit.timeit(lambda: embeddings.search(query), number=250)
    print(f"{elapsed / 250} seconds per query")

dataset = load_dataset("ag_news", split="train")["text"][:25000]

NumPy

Let's start with the simplest possible embeddings database. This will just be a thin wrapper around vectorizing text with sentence-transformers, storing the results as a NumPy array and running similarity queries.

from txtai.embeddings import Embeddings

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "backend": "numpy"})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

embeddings.search("red sox")

[(19831, 0.6780003309249878),

(18302, 0.6639199256896973),

(16370, 0.6617192029953003)]

embeddings.info()

{

"backend": "numpy",

"build": {

"create": "2023-05-04T12:12:02Z",

"python": "3.10.11",

"settings": {

"numpy": "1.22.4"

},

"system": "Linux (x86_64)",

"txtai": "5.6.0"

},

"dimensions": 384,

"offset": 25000,

"path": "sentence-transformers/all-MiniLM-L6-v2",

"update": "2023-05-04T12:12:02Z"

}

The embeddings instance above vectorizes the text and stores the vectors as a NumPy array. Searches return array index positions along with similarity scores. The same can easily be done with sentence-transformers directly. The benefit of the txtai framework is that it makes it easy to swap in different options, as seen next.
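Conceptually, a NumPy backend is a brute-force search. The sketch below shows the general idea in plain NumPy:

- normalize the vectors,
- score every stored row against the query with a dot product,
- keep the top results.

This is an illustration, not txtai's actual implementation. Random vectors stand in for sentence-transformers output.

```python
import numpy as np

def normalize(vectors):
    # Scale each row to unit length so a dot product equals cosine similarity
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.clip(norms, 1e-12, None)

def search(index, query, limit=3):
    # Score every stored vector against the query (brute force, no index structure)
    scores = index @ normalize(query.reshape(1, -1))[0]
    # Sort descending and keep the best `limit` array positions
    top = np.argsort(-scores)[:limit]
    # Return (array position, score) pairs, similar in shape to the output above
    return [(int(i), float(scores[i])) for i in top]

# Random vectors stand in for sentence-transformers output (hypothetical data)
rng = np.random.default_rng(0)
index = normalize(rng.standard_normal((1000, 384)).astype(np.float32))

print(search(index, index[42]))
```

Every query touches every row. That is why brute force stays simple and exact but scales linearly with the number of records.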

SQLite and NumPy

The next combination we'll test is a SQLite database with a NumPy array.

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": "sqlite", "backend": "numpy"})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

Now let's run a search.

embeddings.search("red sox")

[{'id': '19831',

'text': 'Boston Red Sox Team Report - September 6 (Sports Network) - Two of the top teams in the American League tangle in a possible American League Division Series preview tonight, as the West-leading Oakland Athletics host the wild card-leading Boston Red Sox for the first of a three-game set at the ',

'score': 0.6780003309249878},

{'id': '18302',

'text': 'BASEBALL: RED-HOT SOX CLIP THE ANGELS #39; WINGS BOSTON RED SOX fans are enjoying their best week of the season. While their beloved team swept wild-card rivals Anaheim in a three-game series to establish a nine-game winning streak, the hated New York Yankees endured the heaviest loss in their history.',

'score': 0.6639199256896973},

{'id': '16370',

'text': 'Boston Red Sox Team Report - September 1 (Sports Network) - The red-hot Boston Red Sox hope to continue rolling as they continue their three-game set with the Anaheim Angels this evening at Fenway Park.',

'score': 0.6617192029953003}]

embeddings.info()

{

"backend": "numpy",

"build": {

"create": "2023-05-04T12:12:24Z",

"python": "3.10.11",

"settings": {

"numpy": "1.22.4"

},

"system": "Linux (x86_64)",

"txtai": "5.6.0"

},

"content": "sqlite",

"dimensions": 384,

"offset": 25000,

"path": "sentence-transformers/all-MiniLM-L6-v2",

"update": "2023-05-04T12:12:24Z"

}

Same results as before. The only difference is that the text content is now stored in, and returned from, the associated SQLite database.
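The general pattern of pairing a vector array with a relational table can be sketched with only the standard library `sqlite3` module and NumPy. The ranked array positions from the vector search act as ids, and those ids are joined back to rows in SQLite to fetch the text. This is a minimal sketch under stated assumptions. The `documents` table, its schema and the toy 3-dimension vectors are all hypothetical, and txtai's own schema and query logic are different.

```python
import sqlite3
import numpy as np

texts = ["Red Sox win again", "Stocks fall on Wall Street", "Yankees lose at home"]

# Relational side: ids and text in an in-memory SQLite table (hypothetical schema)
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, text TEXT)")
db.executemany("INSERT INTO documents VALUES (?, ?)", enumerate(texts))

# Vector side: row position == document id; toy vectors stand in for real embeddings
vectors = np.array([[0.9, 0.1, 0.0], [0.0, 0.2, 0.9], [0.7, 0.3, 0.1]], dtype=np.float32)
vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)

def search(query, limit=2):
    query = query / np.linalg.norm(query)
    scores = vectors @ query
    results = []
    for i in np.argsort(-scores)[:limit]:
        # Join each vector hit back to its SQLite row to fetch the content
        (text,) = db.execute("SELECT text FROM documents WHERE id = ?", (int(i),)).fetchone()
        results.append({"id": str(int(i)), "text": text, "score": float(scores[i])})
    return results

print(search(np.array([1.0, 0.2, 0.0], dtype=np.float32)))
```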

Let's inspect the ANN object to see how it looks.

print(embeddings.ann.backend.shape)

print(type(embeddings.ann.backend))

(25000, 384)

<class 'numpy.memmap'>

As expected, it's a NumPy array. Let's calculate how long a search query takes to execute.

timer(embeddings)

0.03392000120000011 seconds per query

Not too bad at all!
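The `(25000, 384)` shape reported above also makes it easy to estimate raw memory for a float32 vector array. The estimate is rows × dimensions × 4 bytes. The helper below is a back-of-the-envelope sketch. It ignores index structures, content storage and any compression or quantization.

```python
def vector_bytes(count, dimensions, bytes_per_value=4):
    # Raw size of a dense float32 vector array, with no index or content overhead
    return count * dimensions * bytes_per_value

for count in (25_000, 1_000_000, 100_000_000):
    print(f"{count:>11,} x 384 float32 = {vector_bytes(count, 384) / 1e9:.2f} GB")
```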

SQLite and PyTorch

Let's now try a PyTorch backend.

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": "sqlite", "backend": "torch"})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

Let's run a search again.

embeddings.search("red sox")

[{'id': '19831',

'text': 'Boston Red Sox Team Report - September 6 (Sports Network) - Two of the top teams in the American League tangle in a possible American League Division Series preview tonight, as the West-leading Oakland Athletics host the wild card-leading Boston Red Sox for the first of a three-game set at the ',

'score': 0.678000271320343},

{'id': '18302',

'text': 'BASEBALL: RED-HOT SOX CLIP THE ANGELS #39; WINGS BOSTON RED SOX fans are enjoying their best week of the season. While their beloved team swept wild-card rivals Anaheim in a three-game series to establish a nine-game winning streak, the hated New York Yankees endured the heaviest loss in their history.',

'score': 0.6639199256896973},

{'id': '16370',

'text': 'Boston Red Sox Team Report - September 1 (Sports Network) - The red-hot Boston Red Sox hope to continue rolling as they continue their three-game set with the Anaheim Angels this evening at Fenway Park.',

'score': 0.6617191433906555}]

embeddings.info()

{

"backend": "torch",

"build": {

"create": "2023-05-04T12:12:53Z",

"python": "3.10.11",

"settings": {

"torch": "2.0.0+cu118"

},

"system": "Linux (x86_64)",

"txtai": "5.6.0"

},

"content": "sqlite",

"dimensions": 384,

"offset": 25000,

"path": "sentence-transformers/all-MiniLM-L6-v2",

"update": "2023-05-04T12:12:53Z"

}

And once again, let's inspect the ANN object.

print(embeddings.ann.backend.shape)

print(type(embeddings.ann.backend))

torch.Size([25000, 384])

<class 'torch.Tensor'>

As expected, this time the backend is a Torch tensor. Next we'll calculate the average search time.

timer(embeddings)

0.021084972200000267 seconds per query

A bit faster, since Torch uses the GPU available in this environment to compute the similarity matrix.

SQLite and Faiss

Now let's run the same code with the standard txtai settings of Faiss + SQLite.

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": True})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

embeddings.search("red sox")

[{'id': '19831',

'text': 'Boston Red Sox Team Report - September 6 (Sports Network) - Two of the top teams in the American League tangle in a possible American League Division Series preview tonight, as the West-leading Oakland Athletics host the wild card-leading Boston Red Sox for the first of a three-game set at the ',

'score': 0.6780003309249878},

{'id': '18302',

'text': 'BASEBALL: RED-HOT SOX CLIP THE ANGELS #39; WINGS BOSTON RED SOX fans are enjoying their best week of the season. While their beloved team swept wild-card rivals Anaheim in a three-game series to establish a nine-game winning streak, the hated New York Yankees endured the heaviest loss in their history.',

'score': 0.6639199256896973},

{'id': '16370',

'text': 'Boston Red Sox Team Report - September 1 (Sports Network) - The red-hot Boston Red Sox hope to continue rolling as they continue their three-game set with the Anaheim Angels this evening at Fenway Park.',

'score': 0.6617192029953003}]

embeddings.info()

{

"backend": "faiss",

"build": {

"create": "2023-05-04T12:13:23Z",

"python": "3.10.11",

"settings": {

"components": "IVF632,Flat"

},

"system": "Linux (x86_64)",

"txtai": "5.6.0"

},

"content": true,

"dimensions": 384,

"offset": 25000,

"path": "sentence-transformers/all-MiniLM-L6-v2",

"update": "2023-05-04T12:13:23Z"

}

timer(embeddings)

0.008729957724000087 seconds per query

Everything lines up with the previous examples. Note that Faiss is faster, given it's a vector index. For 25,000 records the difference is negligible, but the advantage of a vector index grows quickly once datasets reach the millions of records.
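The `IVF632,Flat` components in the build settings describe an inverted file index. An index of this type clusters vectors into cells around k-means centroids. At query time, it scans only the cells whose centroids best match the query.

Below is a simplified NumPy sketch of that idea, not Faiss's implementation. The `cells` helper assumes a sizing heuristic of roughly min(4·√n, n/39) cells. That heuristic is consistent with the 632 shown for 25,000 records, but treat it as an assumption rather than txtai's confirmed logic.

```python
import math
import numpy as np

def cells(count):
    # Assumed heuristic: min(4 * sqrt(n), n / 39), with at least one cell
    return max(min(round(4 * math.sqrt(count)), int(count / 39)), 1)

def build_ivf(vectors, nlist, iterations=10, seed=0):
    # Spherical k-means: pick random vectors as centroids, then refine them
    rng = np.random.default_rng(seed)
    centroids = vectors[rng.choice(len(vectors), nlist, replace=False)].copy()
    for _ in range(iterations):
        assign = np.argmax(vectors @ centroids.T, axis=1)
        for c in range(nlist):
            members = vectors[assign == c]
            if len(members):
                centroid = members.mean(axis=0)
                centroids[c] = centroid / np.linalg.norm(centroid)
    # Inverted lists: the ids of the vectors assigned to each cell
    assign = np.argmax(vectors @ centroids.T, axis=1)
    lists = [np.flatnonzero(assign == c) for c in range(nlist)]
    return centroids, lists

def search_ivf(vectors, centroids, lists, query, nprobe=4, limit=3):
    # Only score vectors in the `nprobe` cells closest to the query
    probe = np.argsort(-(centroids @ query))[:nprobe]
    candidates = np.concatenate([lists[c] for c in probe])
    scores = vectors[candidates] @ query
    top = np.argsort(-scores)[:limit]
    return [(int(candidates[i]), float(scores[i])) for i in top]

# Hypothetical data: 2,000 random unit vectors with 64 dimensions
rng = np.random.default_rng(1)
data = rng.standard_normal((2000, 64)).astype(np.float32)
data /= np.linalg.norm(data, axis=1, keepdims=True)

nlist = cells(len(data))  # 51 cells for 2,000 vectors under the assumed heuristic
centroids, lists = build_ivf(data, nlist)
print(cells(25_000), search_ivf(data, centroids, lists, data[7]))
```

The `nprobe` parameter trades accuracy for speed. Scanning more cells finds more true neighbors but touches more vectors.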

SQLite and HNSW

While txtai strives to keep things as simple as possible with sensible defaults out of the box, customizing the backend options can lead to increased performance. The next example stores vectors in an HNSW index and customizes the index options.

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": True, "backend": "hnsw", "hnsw": {"m": 32}})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

embeddings.search("red sox")

[{'id': '19831',

'text': 'Boston Red Sox Team Report - September 6 (Sports Network) - Two of the top teams in the American League tangle in a possible American League Division Series preview tonight, as the West-leading Oakland Athletics host the wild card-leading Boston Red Sox for the first of a three-game set at the ',

'score': 0.6780003309249878},

{'id': '18302',

'text': 'BASEBALL: RED-HOT SOX CLIP THE ANGELS #39; WINGS BOSTON RED SOX fans are enjoying their best week of the season. While their beloved team swept wild-card rivals Anaheim in a three-game series to establish a nine-game winning streak, the hated New York Yankees endured the heaviest loss in their history.',

'score': 0.6639198660850525},

{'id': '16370',

'text': 'Boston Red Sox Team Report - September 1 (Sports Network) - The red-hot Boston Red Sox hope to continue rolling as they continue their three-game set with the Anaheim Angels this evening at Fenway Park.',

'score': 0.6617192029953003}]

embeddings.info()

{

"backend": "hnsw",

"build": {

"create": "2023-05-04T12:13:59Z",

"python": "3.10.11",

"settings": {

"efconstruction": 200,

"m": 32,

"seed": 100

},

"system": "Linux (x86_64)",

"txtai": "5.6.0"

},

"content": true,

"deletes": 0,

"dimensions": 384,

"hnsw": {

"m": 32

},

"metric": "ip",

"offset": 25000,

"path": "sentence-transformers/all-MiniLM-L6-v2",

"update": "2023-05-04T12:13:59Z"

}

timer(embeddings)

0.006160191656000279 seconds per query

Once again, everything matches up with the previous examples. HNSW is slightly faster than Faiss here, but at this scale the difference is negligible.

Hnswlib powers a number of popular vector databases. It's definitely an option worth evaluating.

External Vectorization

txtai has a number of built-in vectorizers backed by Hugging Face Transformers and Sentence Transformers. Just like other txtai modules, vectorization can also be customized.

The next example uses the Hugging Face Inference API to vectorize text.

import numpy as np

import requests

BASE = "https://api-inference.huggingface.co/pipeline/feature-extraction"

def transform(inputs):
    # Your API provider of choice
    response = requests.post(f"{BASE}/sentence-transformers/all-MiniLM-L6-v2", json={"inputs": inputs})
    return np.array(response.json(), dtype=np.float32)

embeddings = Embeddings({"transform": transform, "backend": "numpy", "content": True})

embeddings.index([(0, "sunny", None), (1, "rainy", None)])

embeddings.search("nice day")

[{'id': '0', 'text': 'sunny', 'score': 0.28077083826065063},

{'id': '1', 'text': 'rainy', 'score': 0.18051263689994812}]
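The example above suggests what a `transform` function needs to do: take a list of texts and return a float32 array with one row per input. Below is a hypothetical offline stand-in that needs no network access. It hashes tokens into a fixed-size bag-of-words vector. It only illustrates that input/output shape, and this hashing scheme is not something txtai provides.

```python
import hashlib
import numpy as np

def transform(inputs, dimensions=256):
    # Hypothetical vectorizer: hash each token into one of `dimensions` buckets
    vectors = np.zeros((len(inputs), dimensions), dtype=np.float32)
    for row, text in enumerate(inputs):
        for token in text.lower().split():
            # Stable hash (Python's built-in hash() is salted per process)
            bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dimensions
            vectors[row, bucket] += 1.0
    # Unit-normalize rows; rows with no tokens stay all zeros
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.where(norms == 0, np.float32(1), norms)

vectors = transform(["sunny day", "rainy day", ""])
print(vectors.shape, vectors.dtype)
```

A function like this could be passed the same way as the API-backed one, for example `Embeddings({"transform": transform, "backend": "numpy", "content": True})`. This assumes txtai calls it with a list of strings, as the API example implies.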

Configuration storage

Configuration is passed to an embeddings instance as a dictionary. When saving an embeddings instance, the default behavior is to save configuration as a pickled object. JSON can alternatively be used.

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": True, "format": "json"})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

# Save embeddings

embeddings.save("index")

!cat index/config.json

{

"path": "sentence-transformers/all-MiniLM-L6-v2",

"content": true,

"format": "json",

"dimensions": 384,

"backend": "faiss",

"offset": 25000,

"build": {

"create": "2023-05-04T12:14:25Z",

"python": "3.10.11",

"settings": {

"components": "IVF632,Flat"

},

"system": "Linux (x86_64)",

"txtai": "5.6.0"

},

"update": "2023-05-04T12:14:25Z"

}

The stored configuration is almost identical to the output of an embeddings.info() call. This is by design: JSON configuration is meant to be human-readable, which makes it a good option when sharing an embeddings database on the Hugging Face Hub.
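The difference between the two storage formats can be seen with the standard library alone. Below, the configuration dictionary from the example above is serialized both ways. The sketch only contrasts the formats and does not reproduce how txtai writes its files. Note that Python's documentation warns against unpickling data from untrusted sources, which is another reason JSON suits shared indexes.

```python
import json
import pickle

config = {"path": "sentence-transformers/all-MiniLM-L6-v2", "content": True, "format": "json"}

# Pickle: compact binary, Python-specific, unsafe to load from untrusted sources
pickled = pickle.dumps(config)

# JSON: plain text that can be read, diffed and edited by hand
text = json.dumps(config, indent=2)

print(pickled[:20])
print(text)
```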

SQLite vs DuckDB

The last thing we'll explore is the database backend.

SQLite is a row-oriented database, while DuckDB is column-oriented. This design difference is a factor to consider when evaluating the expected workload. Let's explore.

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": "sqlite"})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

timer(embeddings, "SELECT text FROM txtai where id = 3980")

0.0001413383999997677 seconds per query

timer(embeddings, "SELECT count(*), text FROM txtai group by text order by count(*) desc")

0.03718761139199978 seconds per query

# Create embeddings instance

embeddings = Embeddings({"path": "sentence-transformers/all-MiniLM-L6-v2", "content": "duckdb"})

# Index data

embeddings.index((x, text, None) for x, text in enumerate(dataset))

timer(embeddings, "SELECT text FROM txtai where id = 3980")

0.002780103128000519 seconds per query

timer(embeddings, "SELECT count(*), text FROM txtai group by text order by count(*) desc")

0.01854579007600023 seconds per query

While the dataset of 25,000 rows is small, we can start to see the differences. SQLite has a much faster single-row retrieval time, while DuckDB does better with an aggregate query. This follows from the row-oriented vs column-oriented design and is a factor to consider when developing a solution.
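The two layouts can be illustrated conceptually with plain Python structures. In a row layout, each record's fields sit together, so fetching one record is a single access. In a column layout, each field's values sit together, so an aggregate over one field reads only that column. This is a toy sketch with hypothetical records. Real engines add pages, indexes, compression and vectorized execution, and nothing here measures performance.

```python
from collections import Counter

records = [
    {"id": "0", "text": "Red Sox win", "tag": "sports"},
    {"id": "1", "text": "Stocks fall", "tag": "business"},
    {"id": "2", "text": "Red Sox win", "tag": "sports"},
]

# Row-oriented layout (SQLite-style): each record's fields are stored together
rows = {record["id"]: record for record in records}

# Column-oriented layout (DuckDB-style): each field's values are stored together
columns = {field: [record[field] for record in records] for field in records[0]}

# Point lookup: one access in the row layout, one access per column otherwise
row = rows["2"]
position = columns["id"].index("2")
column_row = {field: values[position] for field, values in columns.items()}

# Aggregation: the column layout scans only "text"; the row layout visits full records
column_counts = Counter(columns["text"])
row_counts = Counter(record["text"] for record in rows.values())

print(row == column_row, column_counts.most_common(1))
```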

Wrapping up

This article explored different combinations of database and vector index backends. With modern hardware, a single-node index can go a long way, easily scaling into the hundreds of millions and even billions of records. When hardware becomes a bottleneck, external vector databases are one option to consider. Another is building a distributed txtai embeddings cluster.

There is power in simplicity. Many paid services try to convince us that signing up for an API account is the best place to start. In some cases, such as teams with few or no developers, this is true. But for teams with developers, options like txtai should be evaluated.