Benefits of hybrid search

Improve accuracy with a combination of semantic and keyword search

Semantic search is a new category of search built on recent advances in Natural Language Processing (NLP). Traditional search systems use keywords to find data. Semantic search has an understanding of natural language and identifies results that have the same meaning, not necessarily the same keywords.

While semantic search adds amazing capabilities, sparse keyword indexes can still add value. There may be cases where finding an exact match is important or we just want a fast index to quickly do an initial scan of a dataset.

Both methods have their merits. What if we combine them together to build a unified hybrid search capability? Can we get the best of both worlds?

This article will explore the benefits of hybrid search.

Install dependencies

Install txtai and all dependencies.

# Install txtai

pip install txtai pytrec_eval rank-bm25 elasticsearch

Introducing semantic, keyword and hybrid search

Before diving into the benchmarks, let's briefly discuss how semantic and keyword search works.

Semantic search uses large language models to vectorize inputs into arrays of numbers. Similar concepts will have similar values. The vectors are typically stored in a vector database, which is a system that specializes in storing these numerical arrays and finding matches. Vector search transforms an input query into a vector and then runs a search to find the best conceptual results.

Keyword search tokenizes text into lists of tokens per document. These tokens are aggregated into token frequencies per document and stored in term frequency sparse arrays. At search time, the query is tokenized and the tokens of the query are compared to the tokens in the dataset. This is more a literal process. Keyword search is like string matching, it has no conceptual understanding, it matches on characters and bytes.

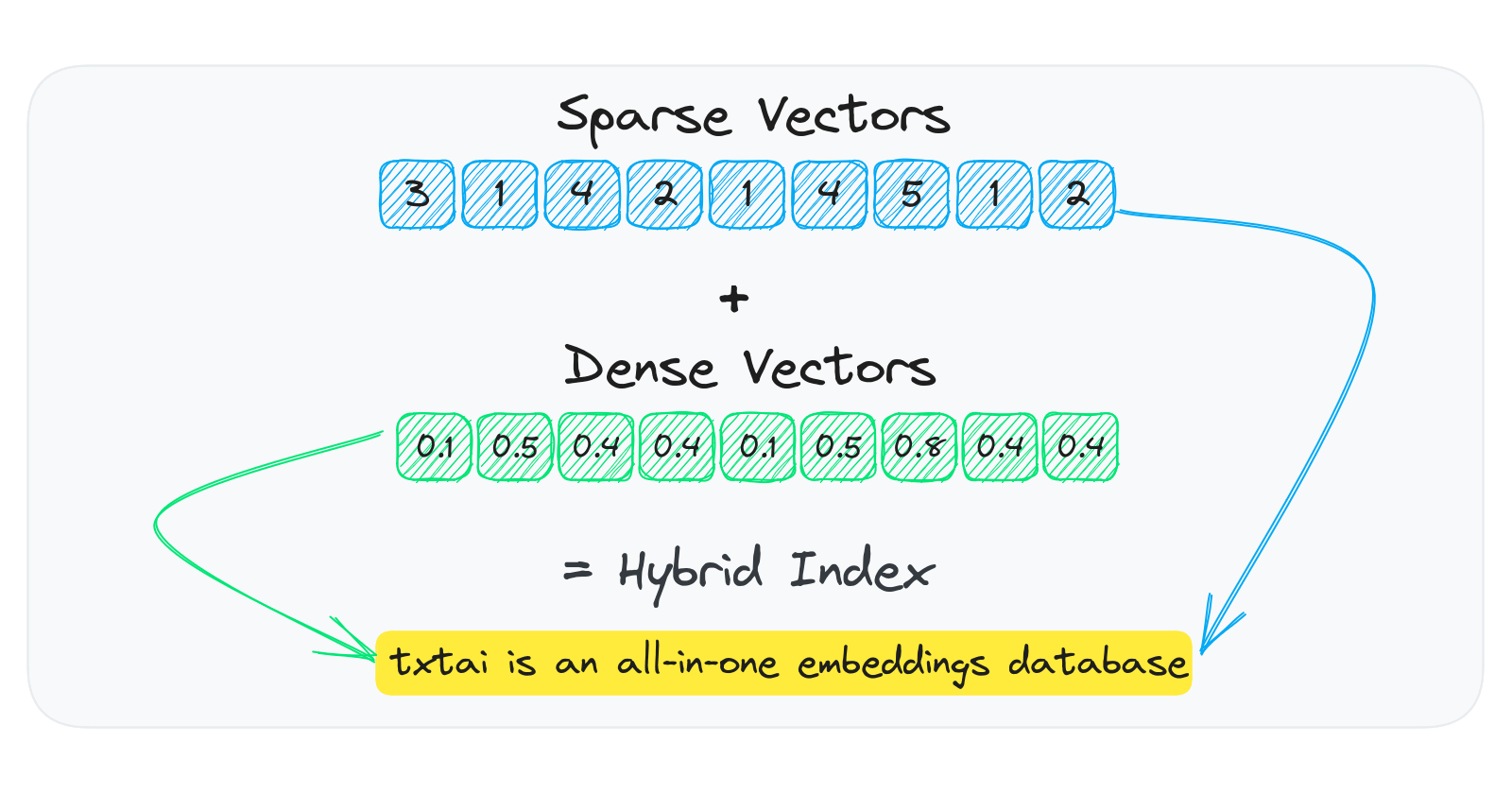

Hybrid search combines the scores from semantic and keyword indexes. Given that semantic search scores are typically 0 - 1 and keyword search scores are unbounded, a method is needed to combine the results.

The two methods supported in txtai are:

Convex Combination when sparse scores are normalized

Reciprocal Rank Fusion (RRF) when sparse scores aren't normalized

The default method in txtai is convex combination and we'll use that.

Evaluating performance

Now it's time to benchmark the results. For these tests, we'll use the BEIR dataset. We'll also use a benchmarks script from the txtai project. This benchmarks script has methods to work with the BEIR dataset.

We'll select a subset of the BEIR sources for brevity. For each source, we'll benchmark a bm25 index, an embeddings index and a hybrid or combined index.

import os

# Get benchmarks script

os.system("wget https://raw.githubusercontent.com/neuml/txtai/master/examples/benchmarks.py")

# Create output directory

os.makedirs("beir", exist_ok=True)

# Download subset of BEIR datasets

datasets = ["nfcorpus", "fiqa", "arguana", "scidocs", "scifact"]

for dataset in datasets:

url = f"https://public.ukp.informatik.tu-darmstadt.de/thakur/BEIR/datasets/{dataset}.zip"

os.system(f"wget {url}")

os.system(f"mv {dataset}.zip beir")

os.system(f"unzip -d beir beir/{dataset}.zip")

# Remove existing benchmark data

if os.path.exists("benchmarks.json"):

os.remove("benchmarks.json")

Now let's run the benchmarks.

# Remove existing benchmark data

if os.path.exists("benchmarks.json"):

os.remove("benchmarks.json")

# Runs benchmark evaluation

def evaluate(method):

for dataset in datasets:

command = f"python benchmarks.py beir {dataset} {method}"

print(command)

os.system(command)

# Calculate benchmarks

for method in ["bm25", "embed", "hybrid"]:

evaluate(method)

import json

import pandas as pd

def benchmarks():

# Read JSON lines data

with open("benchmarks.json") as f:

data = f.read()

df = pd.read_json(data, lines=True).sort_values(by=["source", "ndcg_cut_10"], ascending=[True, False])

return df[["source", "method", "ndcg_cut_10", "map_cut_10", "recall_10", "P_10", "index", "search", "memory"]].reset_index(drop=True)

# Load benchmarks dataframe

df = benchmarks()

df[df.source == "nfcorpus"].reset_index(drop=True)

| source | method | ndcg_cut_10 | map_cut_10 | recall_10 | P_10 | index | search | memory |

| nfcorpus | hybrid | 0.34531 | 0.13369 | 0.17437 | 0.25480 | 29.46 | 3.57 | 2900 |

| nfcorpus | embed | 0.30917 | 0.10810 | 0.15327 | 0.23591 | 33.64 | 3.33 | 2876 |

| nfcorpus | bm25 | 0.30639 | 0.11728 | 0.14891 | 0.21734 | 2.72 | 0.96 | 652 |

df[df.source == "fiqa"].reset_index(drop=True)

| source | method | ndcg_cut_10 | map_cut_10 | recall_10 | P_10 | index | search | memory |

| fiqa | hybrid | 0.36642 | 0.28846 | 0.43799 | 0.10340 | 233.90 | 68.42 | 3073 |

| fiqa | embed | 0.36071 | 0.28450 | 0.43188 | 0.10216 | 212.30 | 58.83 | 2924 |

| fiqa | bm25 | 0.23559 | 0.17619 | 0.29855 | 0.06559 | 19.78 | 12.84 | 76 |

df[df.source == "arguana"].reset_index(drop=True)

| source | method | ndcg_cut_10 | map_cut_10 | recall_10 | P_10 | index | search | memory |

| arguana | hybrid | 0.48467 | 0.40101 | 0.75320 | 0.07532 | 37.80 | 21.22 | 2924 |

| arguana | embed | 0.47781 | 0.38781 | 0.76671 | 0.07667 | 34.11 | 10.21 | 2910 |

| arguana | bm25 | 0.45713 | 0.37118 | 0.73471 | 0.07347 | 3.39 | 10.95 | 663 |

df[df.source == "scidocs"].reset_index(drop=True)

| source | method | ndcg_cut_10 | map_cut_10 | recall_10 | P_10 | index | search | memory |

| scidocs | embed | 0.21718 | 0.12982 | 0.23217 | 0.1146 | 127.63 | 4.41 | 2929 |

| scidocs | hybrid | 0.21104 | 0.12450 | 0.22938 | 0.1134 | 138.00 | 6.43 | 2999 |

| scidocs | bm25 | 0.15063 | 0.08756 | 0.15637 | 0.0772 | 13.07 | 1.42 | 722 |

df[df.source == "scifact"].reset_index(drop=True)

| source | method | ndcg_cut_10 | map_cut_10 | recall_10 | P_10 | index | search | memory |

| scifact | hybrid | 0.71305 | 0.66773 | 0.83722 | 0.09367 | 39.51 | 2.35 | 2918 |

| scifact | bm25 | 0.66324 | 0.61764 | 0.78761 | 0.08700 | 4.40 | 0.93 | 658 |

| scifact | embed | 0.65149 | 0.60193 | 0.78972 | 0.08867 | 35.15 | 1.48 | 2889 |

The sections above show the metrics per source and method.

The table headers list the source (dataset), index method, NDCG@10/MAP@10/RECALL@10/P@10 accuracy metrics, index time(s), search time(s) and memory usage(MB). The tables are sorted by NDCG@10 descending.

Looking at the results, we can see that hybrid search often performs better than embeddings or bm25 individually. In some cases, as with scidocs, the combination performs worse. But in the aggregate, the scores are better. This holds true for the entire BEIR dataset. For some sources, bm25 does best, some embeddings but overall the combined hybrid scores do the best.

Hybrid search isn't free though, it is slower as it has extra logic to combine the results. For individual queries, the results are often negligible.

Wrapping up

This article covered ways to improve search accuracy using a hybrid approach. We evaluated performance over a subset of the BEIR dataset to show how hybrid search, in many situations, can improve overall accuracy.

Custom datasets can also be evaluated using this method as specified in this link. This article and the associated benchmarks script can be reused to evaluate what method works best on your data.